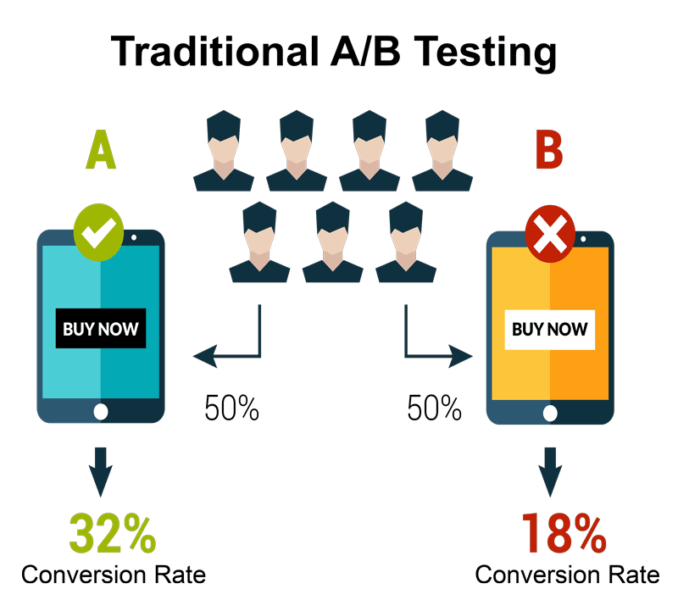

A/B testing is a way of determining which of two variants is more effective at reaching a goal. It typically involves randomly serving one of two versions of a piece of marketing collateral. It can be used in almost every channel, including emails, social media posts and ads, Google Ads, landing pages, videos, and logos. However, measuring how effective each variant is requires a clearly defined goal. The classic example sends traffic randomly to one of two landing pages. One page has a green call-to-action button and the other has a red one. If significantly more people click the red button, the red variant wins the test. There are two important things to remember with these tests:

1. Everything other than the element being tested should be identical between the variants. In the example above, the only difference was the button color. Tests that vary several elements at once, and therefore involve more than two variants, are covered under multivariate testing.

2. You need a large enough sample to detect a statistically significant difference. The required sample size varies from test to test. It depends largely on how big the difference between the variants turns out to be and on your baseline conversion rate. For the same relative improvement, a page that already converts well needs a smaller sample than one that converts only 5% of its visitors. In general, the more conversions you collect and the larger the gap between the two variants' conversion rates, the more confident you can be that the result holds for the wider population.
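To make "significantly more" and "large enough sample" concrete, here is a minimal Python sketch that uses only the standard library. It assumes a two-sided test at a 5% significance level with 80% power, and it relies on the normal approximation for comparing two conversion rates. The function names and the conversion figures are hypothetical, chosen only for illustration. `two_proportion_z_test` checks whether an observed gap is larger than chance would plausibly explain. `sample_size_per_variant` estimates how many visitors each variant needs before the test starts.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test (hypothetical helper).

    Returns (z, two-sided p-value) for the difference in conversion rates.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both variants share a single conversion rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: probability of a gap at least this large in either direction.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def sample_size_per_variant(p_base: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant (normal approximation, hypothetical helper)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_base * (1 - p_base) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_variant - p_base) ** 2)


if __name__ == "__main__":
    # Hypothetical results: green button (A) vs red button (B), 10,000 visitors each.
    z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")

    # The same 20% relative lift at two different baseline conversion rates.
    print(sample_size_per_variant(0.05, 0.06))  # low baseline
    print(sample_size_per_variant(0.30, 0.36))  # high baseline
```

With the made-up figures above (5.0% vs 5.8% conversion on 10,000 visitors each), the p-value comes out well below 0.05, so the red button would win. The sample-size calls illustrate the point in the text. Lifting a 5% baseline to 6% needs roughly 8,000 visitors per variant, while the same relative lift from 30% to 36% needs fewer than 1,000. Testing tools may apply different corrections or methods, so treat these numbers as rough estimates.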

Google Analytics and Mailchimp both offer A/B testing features that are relatively easy to set up.